For part I, see De-identifying Data for Confidentiality - Part I

De-identifying Field Data

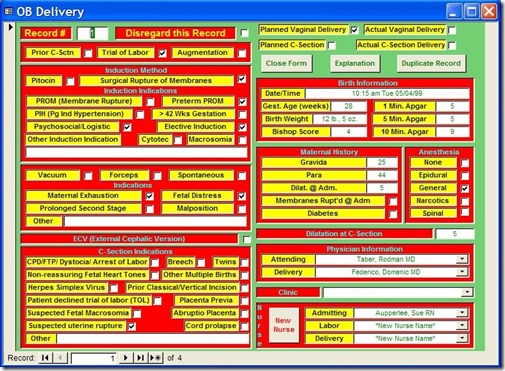

In certain instances, simply randomizing records is not sufficient. Certain field values have inherent information regardless of the record that holds them. Phone numbers, social security number, patient numbers, and the like all contain information all by themselves that may breach confidentiality. HIPAA regulations in particular are very strict about displaying any data that could identify a patient.

So to further mask your data, it may be necessary to scramble character data with in the field itself. Now, it doesn't make sense to randomize some types of fields. Name and address fields in particular will look strange if randomized in this way.

CustomerID | CustomerNumber | FirstName | LastName |

1 | 0A30 | Rgroe | arConsl |

2 | 450A | aSm | rianMt |

3 | 14A0 | aharS | ltnrSadueh |

Not only does it look strange, the capitalization of the names makes it fairly easy to re-identify the field values. So this function needs to be used with some discretion. It is best used with character data that is composed of numbers.

A further complication is that sometimes character data uses an input mask that displays characters that may either be stored or not. For instance, when you see a social security number in a field 111-22-3333, you don't really know if the number was stored as displayed or stored as 111223333. If the dashes are stored, the number might be randomized as 2-33311-13. So any function that randomizes character data must take these complications into account.

Overview of the Process

This process is similar to the record randomization process, but it only works on character data. Instead of creating a temporary table that holds all the records, we'll create two temporary string variables, one to hold value of the field we want to randomize and another to hold the randomized value. Then we'll grab random characters from the Source string and append them one at time into the Target string. We'll start at the top record of the main table, randomize the character string, write it back into the record, then proceed through the entire table until all values of the field have been randomized.

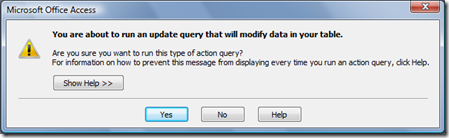

Unlike the record randomization process, this process will work on fields with Unique Indexes and Primary Keys. In fact, if a field has a unique index, you should not remove it. It is entirely possible to create duplicate records at random, so I will include error trapping to identify duplicate records. If one is discovered, it will randomize the string again until it creates a non-duplicate field value.

While this process will work on a Primary Key field, you should be careful. If this field participates in a Relationship, you will lose relational integrity. That is, the values in the primary key will no longer match values in the foreign key of the related table. If you do this, you should make certain your relationships have Cascade Updates property set to Yes.

This is not an issue, however, if your Primary Key is an Autonumber field for two reasons. One, an Autonumber is a long integer rather than character data, and secondly, you cannot change the value of an Autonumber.

Randomizing Character Fields

Sub RandomizeCharacterField _

(TableName As String, _

FieldName As String, _

FieldFormat As Variant)

'***This subroutine randomizes the characters of the

' indicated field in the selected table. It requires

' a Reference to Microsoft DAO 3.6 Object Library

On Error GoTo Err_RandomizeCharacterField

Dim db As DAO.Database

Dim rsTableName As DAO.Recordset

Dim i As Integer, StrLen As Integer

Dim strSource As String

Dim strTarget As String

Dim LeftSource As String

'*** used with the RND() function to return a random number

Randomize

'*** open the database

Set db = CurrentDb

'*** open the source table (tblRandom)

Set rsTableName = db.OpenRecordset(TableName)

'*** If the field format is Null, set it to the empty string

FieldFormat = Nz(FieldFormat, "")

'Loop thru the table, starting with the first record

Do Until rsTableName.EOF

'return label: used if a duplicate is produced

ReRandomize:

'*** set temp string to the field value

' and set receiving string to the empty string

strSource = rsTableName(FieldName)

strTarget = ""

'*** Repeat loop until all characters have been

' removed from temp string

Do While Len(strSource) > 0

StrLen = Len(rsTableName(FieldName))

'*** select a character position at random

If StrLen <> 1 Then

i = Int((StrLen - 1) * Rnd + 1)

End If

'*** grab the selected character and append

' it to the target string

If InStr(FieldFormat, Mid(strSource, i, 1)) = 0 Then

strTarget = strTarget & Mid(strSource, i, 1)

End If

'*** delete the selected character from the Source String

strSource = Left(strSource, i - 1) & _

Mid(strSource, i + 1)

Loop

'*** when the Target String is complete, write it back to

'*** the original table

rsTableName.Edit

'*** apply the appropriate format to the string

rsTableName(FieldName) = Format(strTarget, FieldFormat)

rsTableName.Update

'*** proceed to the next record

rsTableName.MoveNext

Loop

MsgBox "Field: " & FieldName & " from Table: " & _

cboTable & " has been character scrambled."

'*** Clean up object variables

rsTableName.Close

db.Close

Set rsTableName = Nothing

Set db = Nothing

Exit_RandomizeCharacterField:

Exit Sub

Err_RandomizeCharacterField:

If Err.Number = 3022 Then

'*** if there is a duplicate value in a field

' with a unique index, randomize it again

Resume ReRandomize

Else

MsgBox Err.Description

MsgBox Err.Number

Resume Exit_RandomizeCharacterField

End If

End Sub

Calling the Routine

In the Character Randomizing routine above, I have a line of code that applies the appropriate format. However, I don't say how you'll know what the appropriate format is. That's because we'll decide this in the calling routine.

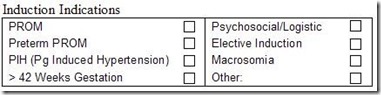

Whether we send a format string to the randomization routine depends on whether the field has an input mask. It also depends on what kind of input mask it has.

There are three possibilities for the InputMask property of a field: an input mask that saves the characters, an input mask that does not save display characters, and no input mask at all. However for our purposes, an input mask that does not save characters is the same as no input mask at all. So all we have to test for is an input mask that saves display characters.

An input mask has three sections. The first is the input mask itself. The second is tells the mask whether to save the display characters or not, and the third tells what kind of placeholder character the input mask will display. Semi-colons divide each section.

We are only concerned here with the second section. If the input mask saves the character, the second section holds a zero. A one or nothing indicates the mask will not save the display characters. Therefore, we can test the input mask for ";0;". If this string does not appear in the input mask, the display characters are not saved.

The InputMask property is one of those properties that don't exist for the object unless a value is assigned to it. So if a field does not have an input mask, trying to read the property will produce an error. Therefore, I will trap for that error (3270) to cover the case where there is no input mask.

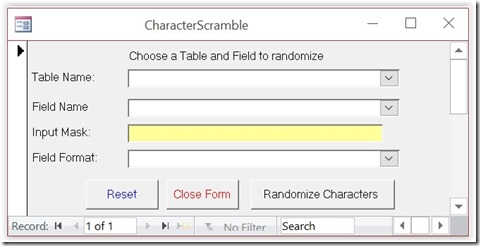

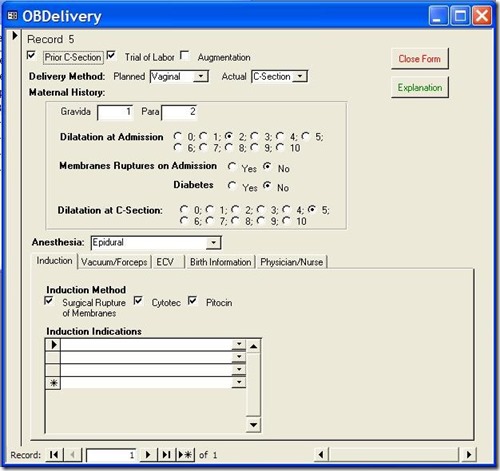

Again, how you implement this code depends on how it is going to be used. As with the Record Randomizing routine, I chose to create a form with combo boxes on the form (cboTable and cboFieldName) to hold the table, field names, and one for the format. I also added a control that displays the input mask for identification purposes.

The following code uses such a set up and would be in the On Click event of the cmdRunCharRandomization command button of the form.

Private Sub cmdRunCharRandomization_Click()

'*** This code is the calling routine.

On Error GoTo Err_cmdRunCharRandomization_Click

Dim db As DAO.Database

Dim tdf As DAO.TableDef

Dim fld As DAO.Field

Dim Mask As String

Set db = CurrentDb

Set tdf = db.TableDefs(cboTable)

Set fld = tdf.Fields(cboFieldName1)

'*** Read the InputMask property of the field

Mask = fld.Properties("InputMask")

'*** if the InputMask of the field shows that extra

' characters are saved, call the randomization

' routine and include the FieldFormat string.

If InStr(Mask, ";0;") > 0 Then

Call RandomizeCharacterField( _

cboTable, cboFieldName1, cboFieldFormat)

'*** but if the InputMask shows characters are not

' saved or if there is no InputMask, call the

' randomization routine without sending the

' FieldFormat string.

Else

Call RandomizeCharacterField( _

cboTable, cboFieldName1, "")

End If

Exit_cmdRunCharRandomization_Click:

Exit Sub

Err_cmdRunCharRandomization_Click:

'*** If there is no mask, it will return a

' Property Not Found error

If Err.Number = 3270 Then

Resume Next

ElseIf Err.Number = 3265 Then

MsgBox "Please select both a table and a field"

Resume Exit_cmdRunCharRandomization_Click

Else

MsgBox Err.Description

MsgBox Err.Number

Resume Exit_cmdRunCharRandomization_Click

End If

End Sub

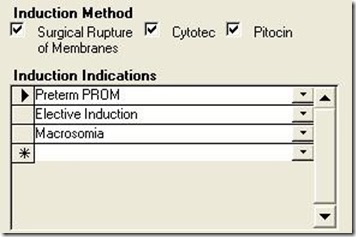

Figure 2: Example form that could be used to run the character randomization code.

Using a form like above allows you to select a field to scramble. You can select, in turn, all of fields with identifying data. On my website, you can find a small sample database called CharacterScramble.accdb, which demonstrates this process, including the calling form.

One caution. If you are randomizing fields to de-identify data to comply with regulatory rules, you should get approval for using this process with your regulatory compliance officer. For instance, HIPAA rules specify that if you de-identify data, you must use an algorithm which prevents the data from being reconstructed. Since this routine selects characters at random, I believe it complies with this instruction, but only your regulatory officer would know for sure.

Conclusion

There are many reasons for masking, blinding, scrambling, or de-identifying data within a database. Creating realistic data for demonstration purposes and masking data to comply with regulatory rules are just two. But with a little programming expertise, it doesn't have to be an onerous task.